Introduction to Word Embeddings

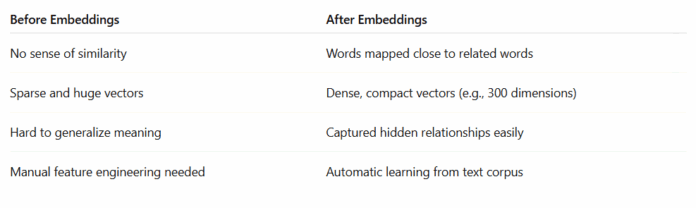

Two groundbreaking techniques, Word2Vec and GloVe, changed the way machines represent words and helped drive a revolution in natural language processing (NLP). Instead of treating words as arbitrary symbols, both map each word to a dense vector of numbers learned from the contexts in which it appears. These representations let computers capture much of the meaning of words and contributed to significant progress in areas such as machine translation, text summarization, and sentiment analysis.

Key Techniques: CBOW and Skip-gram

Word2Vec is built on two closely related training architectures:

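- CBOW (Continuous Bag of Words): This method predicts a word from the words surrounding it within a fixed window. Because the context word vectors are averaged, their order inside the window is ignored, hence "bag of words." It’s like trying to guess a missing word in a sentence by looking at the words around it.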

- Skip-gram: This technique does the opposite; it predicts surrounding words given the current word. For example, if you know the word "dog," Skip-gram would try to predict words that are often found near "dog" in texts, like "bone" or "pet."
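To make the difference between the two architectures concrete, the sketch below turns one sentence into the training examples each architecture would learn from. It is a simplified illustration that assumes whitespace tokenization and a fixed symmetric window; the function names `skipgram_pairs` and `cbow_examples` are hypothetical, and real Word2Vec implementations add refinements such as randomly shrinking the window and subsampling very frequent words.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram examples: (center, context) pairs, one per neighbour of each word."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # skip the center word itself
                pairs.append((center, tokens[j]))
    return pairs


def cbow_examples(tokens, window=2):
    """CBOW examples: (context_words, target), one per position in the sentence."""
    examples = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples


sentence = "the dog chewed a bone".split()
print(skipgram_pairs(sentence, window=1))
print(cbow_examples(sentence, window=1))
```

With `window=1`, Skip-gram produces one pair per neighbour, for example `('dog', 'chewed')`, while CBOW produces one example per position, for example `(['the', 'chewed'], 'dog')`.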

Learning Semantic Relationships

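The model learns word vectors that capture semantic relationships without any hand-written rules. Because training rewards vectors that are good at predicting each word's contexts, words that appear in similar contexts end up close together in the vector space, with closeness usually measured by cosine similarity. For instance, "king" and "queen" should lie closer to each other than either does to "car", because they occur in similar contexts and share related meanings.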
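Cosine similarity compares the direction of two vectors, ignoring their length. The sketch below computes it in plain Python; the three-dimensional vectors are hypothetical, hand-picked values chosen only to illustrate the king/queen/car example, not output from a trained model (real embeddings are learned and typically have a few hundred dimensions).

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between u and v: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical hand-picked 3-d vectors, for illustration only (not learned from data).
embeddings = {
    "king": [0.80, 0.65, 0.10],
    "queen": [0.75, 0.70, 0.15],
    "car": [0.10, 0.05, 0.90],
}

sim_royal = cosine_similarity(embeddings["king"], embeddings["queen"])
sim_car = cosine_similarity(embeddings["king"], embeddings["car"])
print(f"king~queen: {sim_royal:.3f}, king~car: {sim_car:.3f}")
```

With these toy values the king/queen similarity is close to 1, while king/car is around 0.2.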

Advantages of Word2Vec

The Word2Vec model, which includes both CBOW and Skip-gram, has several advantages:

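- ✅ Fast to train: each model is a shallow network, so it scales to very large text corpora

- ✅ Uses unsupervised learning: training examples are generated from raw text, so it doesn’t need labeled data

- ✅ Captures the meaning of words from their context alone: words used in similar contexts receive similar vectors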
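The first two advantages are visible even in a toy trainer: the only input is raw text, and each update touches just a handful of vectors. The sketch below trains Skip-gram with negative sampling, one of the training methods introduced with Word2Vec, on a hypothetical five-sentence corpus using NumPy. It rests on simplifying assumptions (negatives drawn uniformly from the vocabulary, plain SGD, a fixed window) and is not the reference implementation: the original tool draws negatives from the unigram distribution raised to the 3/4 power, and optimized libraries such as gensim are typically used in practice. The function name `train_sgns` is hypothetical.

```python
import numpy as np


def train_sgns(corpus, dim=10, window=2, negatives=3, epochs=100, lr=0.1, seed=0):
    """Toy Skip-gram with negative sampling (SGNS) trained by plain SGD.

    Returns the vocabulary, the learned center-word vectors, and the summed loss per epoch.
    """
    rng = np.random.default_rng(seed)
    vocab = sorted({w for sentence in corpus for w in sentence})
    index = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)
    W_in = rng.normal(scale=0.1, size=(V, dim))   # center-word vectors (the embeddings we keep)
    W_out = rng.normal(scale=0.1, size=(V, dim))  # context-word vectors used during training
    labels = np.zeros(negatives + 1)
    labels[0] = 1.0                               # first target is the observed context word
    sign = 2.0 * labels - 1.0                     # +1 for the observed context, -1 for negatives
    losses = []
    for _ in range(epochs):
        epoch_loss = 0.0
        for sentence in corpus:
            ids = [index[w] for w in sentence]
            for i, center in enumerate(ids):
                for j in range(max(0, i - window), min(len(ids), i + window + 1)):
                    if j == i:
                        continue
                    # One observed context word plus uniformly sampled "negative" words.
                    targets = np.concatenate(([ids[j]], rng.integers(0, V, size=negatives)))
                    v = W_in[center].copy()       # copy so both updates use pre-update values
                    U = W_out[targets]            # fancy indexing returns a copy, shape (k+1, dim)
                    logits = U @ v
                    # Logistic loss: -log sigmoid(x) for the true pair, -log sigmoid(-x) for negatives.
                    epoch_loss += float(np.sum(np.logaddexp(0.0, -sign * logits)))
                    grad = 1.0 / (1.0 + np.exp(-logits)) - labels  # dLoss/dlogit
                    W_in[center] -= lr * (grad @ U)
                    np.add.at(W_out, targets, -lr * np.outer(grad, v))  # safe for repeated targets
        losses.append(epoch_loss)
    return vocab, W_in, losses


corpus = [
    "the dog chewed a bone".split(),
    "the dog is a loyal pet".split(),
    "the cat is a pet".split(),
    "i drove the car to work".split(),
    "the car needs fuel".split(),
]
vocab, vectors, losses = train_sgns(corpus)
print(f"loss in first epoch: {losses[0]:.1f}, last epoch: {losses[-1]:.1f}")
```

Because fresh negatives are sampled every epoch, the loss fluctuates, but it should trend downward as the vectors of words that co-occur are pulled together and random pairs are pushed apart.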

Global Context with GloVe

While Word2Vec learns from local context windows one at a time, another technique, GloVe (Global Vectors for Word Representation), also draws on global co-occurrence statistics. GloVe first counts how frequently each pair of words appears together across the entire corpus, then learns embeddings from those aggregated counts. This lets the model use corpus-wide statistics directly instead of seeing them only indirectly, one window at a time.
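GloVe's first step can be sketched as building a word-word co-occurrence table over the whole corpus. The function below is a minimal illustration that assumes whitespace-tokenized sentences and a symmetric window; following the GloVe paper, a pair of words `d` positions apart adds `1/d` to the count rather than a full 1. The name `cooccurrence_counts` is hypothetical.

```python
from collections import defaultdict


def cooccurrence_counts(corpus, window=2):
    """Symmetric, distance-weighted co-occurrence counts over a whole corpus.

    Hypothetical helper: a word pair d positions apart adds 1/d to X[(w1, w2)] and X[(w2, w1)].
    """
    counts = defaultdict(float)
    for sentence in corpus:
        for i, word in enumerate(sentence):
            # Look back up to `window` positions; the symmetric update covers look-ahead.
            for j in range(max(0, i - window), i):
                weight = 1.0 / (i - j)
                counts[(word, sentence[j])] += weight
                counts[(sentence[j], word)] += weight
    return dict(counts)


corpus = [
    "the dog chewed a bone".split(),
    "the dog is a pet".split(),
]
X = cooccurrence_counts(corpus, window=2)
print(X[("the", "dog")], X[("dog", "a")], X[("the", "chewed")])
```

This prints `2.0 1.0 0.5`: "the" and "dog" are adjacent in both sentences, while "dog" and "a" are two positions apart in each. A real GloVe pipeline accumulates such counts over the full corpus before any vectors are trained.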

Benefits of GloVe

GloVe offers additional benefits:

- ✅ Combines global and local context for a richer representation of word meaning

- ✅ Was reported in the original GloVe paper to produce well-structured vector spaces, with strong results on word-analogy and word-similarity benchmarks
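How GloVe combines the two kinds of information can be read off its training objective, as given in the original GloVe paper. For a vocabulary of size $V$, word vectors $\mathbf{w}_i$ and context vectors $\tilde{\mathbf{w}}_j$ (with biases $b_i$ and $\tilde{b}_j$) are fitted so that their dot product approximates the logarithm of the global co-occurrence count $X_{ij}$, while the weighting function $f$ limits the influence of very rare and very frequent pairs:

$$
J = \sum_{i,j=1}^{V} f(X_{ij}) \left( \mathbf{w}_i^{\top} \tilde{\mathbf{w}}_j + b_i + \tilde{b}_j - \log X_{ij} \right)^2,
\qquad
f(x) =
\begin{cases}
(x / x_{\max})^{\alpha} & \text{if } x < x_{\max}, \\
1 & \text{otherwise.}
\end{cases}
$$

Since $f(0) = 0$, word pairs that never co-occur drop out of the sum, so $\log 0$ is never evaluated; the paper reports using $x_{\max} = 100$ and $\alpha = 3/4$.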

Conclusion

The introduction of Word2Vec and GloVe marked a significant milestone in NLP, giving machines a practical way to represent words through the contexts in which they appear. These models paved the way for more advanced NLP applications, from chatbots and virtual assistants to sophisticated language translation software. By capturing semantic relationships from both local and global context, these techniques transformed how machines process and represent natural language.