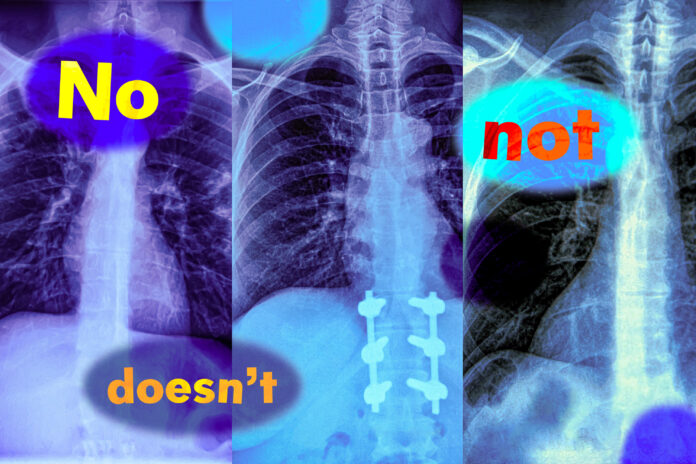

Understanding Negation in Vision-Language Models

Vision-language models are artificial intelligence systems that jointly analyze images and text to understand their content. They are used in a range of fields, including healthcare, where they can help diagnose diseases from medical images. However, a recent study by MIT researchers found that these models have a significant flaw: they do not understand negation, that is, words like "no" and "doesn’t" that specify what is false or absent.

The Problem of Negation

Negation is a crucial aspect of human language, and a single negation word can reverse the meaning of a sentence. For example, "the patient has swollen tissue but no enlarged heart" describes a very different condition from "the patient has swollen tissue and an enlarged heart." Vision-language models often fail to distinguish between two such sentences. In a clinical setting, that confusion could lead to an incorrect diagnosis and potentially harmful consequences.

Testing Vision-Language Models

The MIT researchers designed two benchmark tasks to test how well vision-language models handle negation in image captions. The first asks a model to retrieve images that contain certain objects but not others. The second asks it to select the most appropriate caption for an image from a list of closely related options. The models failed at both tasks: image retrieval performance dropped by nearly 25 percent with negated captions, and overall the models often performed no better than a random guess.
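The two task types described above can be scored with standard metrics. The following is a minimal sketch, not the researchers' benchmark code: the names `recall_at_k`, `multiple_choice_accuracy`, and `chance_accuracy` are hypothetical, images are represented as plain sets of object labels, and `score` stands for any function that maps an image and a caption to a similarity value.

```python
from typing import Callable, FrozenSet, Sequence, Set, Tuple

# Toy stand-in (assumption): an "image" is the set of object labels it contains.
Image = FrozenSet[str]
# Hypothetical image-caption similarity function supplied by the caller.
Scorer = Callable[[Image, str], float]


def recall_at_k(score: Scorer, images: Sequence[Image], query: str,
                relevant: Set[int], k: int) -> float:
    """Fraction of the relevant image indices found among the top-k results."""
    if not relevant:
        raise ValueError("relevant must contain at least one image index")
    # Rank image indices from highest to lowest similarity to the query.
    ranked = sorted(range(len(images)),
                    key=lambda i: score(images[i], query), reverse=True)
    hits = sum(1 for i in ranked[:k] if i in relevant)
    return hits / len(relevant)


def multiple_choice_accuracy(
        score: Scorer,
        items: Sequence[Tuple[Image, Sequence[str], int]]) -> float:
    """Each item is (image, candidate captions, index of the correct caption)."""
    correct = 0
    for image, captions, answer in items:
        # The prediction is the highest-scoring caption; ties go to the first.
        predicted = max(range(len(captions)),
                        key=lambda j: score(image, captions[j]))
        correct += int(predicted == answer)
    return correct / len(items)


def chance_accuracy(num_options: int) -> float:
    """Expected accuracy of a uniform random guess among num_options captions."""
    return 1.0 / num_options
```

Comparing `multiple_choice_accuracy` against `chance_accuracy` for the same number of options gives a quick check of whether a model does better than random guessing on negated captions.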

Causes of the Problem

The researchers traced the models’ failure to a shortcut they call affirmation bias: the models ignore negation words and focus on the objects mentioned in the caption instead. This bias was consistent across every vision-language model they tested. The researchers also found that the models are not trained on image captions that contain negation, so they have little opportunity to learn what negation words mean.
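A toy example can make affirmation bias concrete. The sketch below is an illustration under simplifying assumptions, not how a real vision-language model computes similarity: the object vocabulary, the negation word list, and both scoring functions are invented for this example. The biased scorer only counts mentioned objects that appear in the image, so it cannot tell a negated caption from an affirmative one.

```python
import re

# Illustrative vocabulary and negation cues; both are assumptions for this toy.
OBJECTS = {"dog", "cat", "ball"}
NEGATION_WORDS = {"no", "not", "without"}


def words(caption):
    """Lowercase the caption and split it into alphabetic words."""
    return re.findall(r"[a-z]+", caption.lower())


def affirmation_biased_score(image_objects, caption):
    """Count mentioned objects present in the image; negation words are ignored."""
    return sum(1 for w in words(caption)
               if w in OBJECTS and w in image_objects)


def negation_aware_score(image_objects, caption):
    """Reward each object whose stated presence or absence matches the image.

    Simplification: a negation word applies to the next object mentioned.
    """
    score = 0
    negated = False
    for w in words(caption):
        if w in NEGATION_WORDS:
            negated = True
        elif w in OBJECTS:
            present = w in image_objects
            # +1 for "X" when X is present or "no X" when X is absent, else -1.
            score += 1 if present != negated else -1
            negated = False
    return score


image = {"dog"}  # an image that shows a dog and no cat
with_negation = "a dog but no cat"
without_negation = "a dog and a cat"

# The biased scorer gives both captions the same score for this image.
biased_tie = (affirmation_biased_score(image, with_negation)
              == affirmation_biased_score(image, without_negation))
# The negation-aware scorer prefers the caption that matches the image.
aware_prefers_negated = (negation_aware_score(image, with_negation)
                         > negation_aware_score(image, without_negation))
```

Because the biased scorer assigns identical scores to both captions, any choice between them amounts to a guess, which is consistent with the near-chance behavior the study reports.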

Solving the Problem

To address the problem, the researchers developed a dataset of captions that include negation words. They used a large language model to propose related captions that specify what is excluded from the images, yielding new captions with negation words. Fine-tuning vision-language models with this dataset led to performance gains across the board: the models’ image retrieval abilities improved by about 10 percent, and their performance on the multiple-choice question-answering task improved by about 30 percent.
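The idea of augmenting captions with statements about absent objects can be sketched in a few lines. Note the substitution: the researchers used a large language model to propose the new captions, whereas the sketch below uses a fixed template instead. The function name `negated_captions`, the template wording, and the candidate object list are all assumptions made for illustration.

```python
def negated_captions(caption, present_objects, candidate_objects):
    """Build captions that state which candidate objects are absent.

    Template-based stand-in (assumption) for LLM-proposed captions:
    one new caption is produced per candidate object not in the image.
    """
    absent = sorted(set(candidate_objects) - set(present_objects))
    base = caption.rstrip(".")
    return [f"{base}, with no {obj} in the image." for obj in absent]


# Example: the image shows a dog on a beach; "cat" and "ball" are absent.
augmented = negated_captions(
    "A dog on a beach.",
    present_objects={"dog", "beach"},
    candidate_objects={"dog", "cat", "ball"},
)
```

Each generated caption would be paired with the original image to form an additional fine-tuning example. A language model can produce more natural and varied phrasing than a single template, which is presumably part of why the researchers used one.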

Conclusion

The study highlights the importance of understanding negation in vision-language models. The researchers’ solution is not perfect, but it shows that the problem is solvable. They hope their work will encourage users to think carefully about the problem they want a vision-language model to solve and to design test examples before deployment. Addressing the negation problem can make vision-language models more accurate and reliable, which is crucial for their use in high-stakes settings like healthcare.