Introduction to Nature Image Datasets

Nature image datasets are vast collections of photos, ranging from pictures of butterflies to humpback whales. These datasets are valuable to ecologists because they provide evidence of organisms’ unique behaviors, rare conditions, migration patterns, and responses to pollution and climate change. However, searching through these massive collections is time-consuming, which makes it difficult to retrieve the most relevant images.

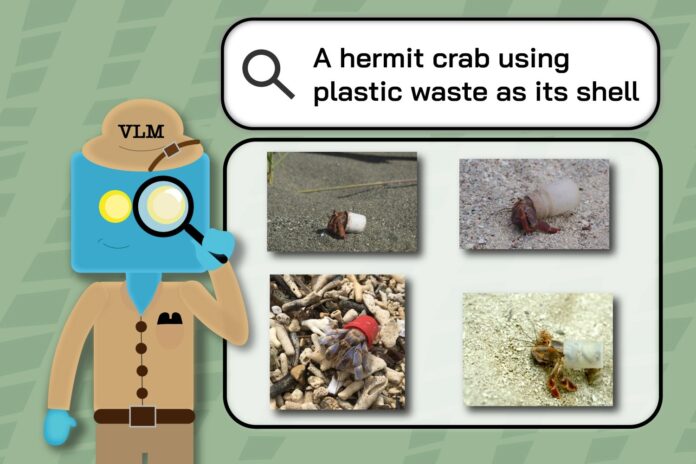

The Role of Multimodal Vision Language Models

To make nature image datasets more useful, researchers are turning to artificial intelligence systems called multimodal vision language models (VLMs). These models are trained on both text and images, allowing them to pinpoint finer details, such as specific trees in the background of a photo. But how well can VLMs assist nature researchers with image retrieval?

Evaluating VLM Performance

A team of researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), University College London, iNaturalist, and elsewhere designed a performance test to evaluate how well VLMs retrieve relevant images. They created a dataset called "INQUIRE," composed of 5 million wildlife pictures and 250 search prompts from ecologists and other biodiversity experts. The team found that larger, more advanced VLMs performed reasonably well on straightforward queries about visual content, but struggled with queries requiring expert knowledge.

Challenges in Image Retrieval

The researchers discovered that VLMs struggled with technical prompts, such as those asking for specific biological conditions or behaviors. For example, VLMs had difficulty finding images of a green frog with a condition called axanthism, which limits its ability to make its skin yellow. The team’s findings indicate that VLMs need more domain-specific training data to process difficult queries.

The INQUIRE Dataset

The INQUIRE dataset includes search queries based on discussions with ecologists, biologists, oceanographers, and other experts. A team of annotators spent 180 hours searching the iNaturalist dataset with these prompts, carefully labeling 33,000 images that matched them. The dataset is designed to capture real examples of scientific inquiries across research areas in ecology and environmental science.

Improving VLM Performance

The researchers believe that VLMs trained on more informative data could one day be great research assistants. To help users find relevant results faster, the team is also developing a query system that lets users filter searches by species.
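The species filter described above can be pictured with a minimal Python sketch. The article does not describe the real system's interface, so everything here is an assumption made for illustration: the `ScoredImage` record, the `filter_and_rank` helper, the relevance scores, and the example data are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class ScoredImage:
    image_id: str
    species: str   # scientific name attached to the photo (assumed metadata)
    score: float   # hypothetical relevance score for the query, higher is better


def filter_and_rank(
    results: Iterable[ScoredImage],
    species: Optional[str] = None,
    top_k: int = 10,
) -> List[ScoredImage]:
    """Keep only the requested species (if any), then return the top_k by score."""
    candidates = list(results)
    if species is not None:
        # Case-insensitive exact match on the species name.
        wanted = species.casefold()
        candidates = [r for r in candidates if r.species.casefold() == wanted]
    return sorted(candidates, key=lambda r: r.score, reverse=True)[:top_k]


# Made-up example data, for illustration only.
results = [
    ScoredImage("img_001", "Lithobates clamitans", 0.62),
    ScoredImage("img_002", "Megaptera novaeangliae", 0.91),
    ScoredImage("img_003", "Lithobates clamitans", 0.78),
]

frogs = filter_and_rank(results, species="lithobates clamitans")
print([r.image_id for r in frogs])  # ['img_003', 'img_001']
```

Filtering before ranking shrinks the candidate pool, so the ranking step only has to order images of the species the user cares about.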

Future Directions

The team’s experiments illustrated that larger models tended to be more effective for both simpler and more intricate searches due to their expansive training data. However, even very large language models struggled to re-rank images, achieving a precision score of only 59.6 percent. The researchers aim to improve the re-ranking system by augmenting current models so that they return better results.
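To make the re-ranking and precision ideas concrete, here is a small Python sketch. The article does not say which precision metric produced the 59.6 percent figure, so this example assumes precision at k (the fraction of the top k results that are labeled relevant), which is one common choice. The `rerank` helper, the image IDs, and the second-stage scores are hypothetical.

```python
from typing import Callable, List, Sequence, Set


def precision_at_k(ranked_ids: Sequence[str], relevant_ids: Set[str], k: int) -> float:
    """Fraction of the top-k ranked images that annotators labeled relevant."""
    if k <= 0:
        raise ValueError("k must be a positive integer")
    hits = sum(1 for image_id in ranked_ids[:k] if image_id in relevant_ids)
    return hits / k  # missing slots (fewer than k results) count as misses


def rerank(ranked_ids: Sequence[str], score: Callable[[str], float], top_n: int) -> List[str]:
    """Re-order only the first top_n results using a second-stage scoring function."""
    head = sorted(ranked_ids[:top_n], key=score, reverse=True)
    return head + list(ranked_ids[top_n:])


# Made-up example: an initial retrieval order and the labeled matches.
initial = ["img_a", "img_b", "img_c", "img_d"]
relevant = {"img_b", "img_d"}

# Hypothetical scores from a second, stronger model.
second_stage = {"img_a": 0.1, "img_b": 0.9, "img_c": 0.2, "img_d": 0.8}
reranked = rerank(initial, second_stage.__getitem__, top_n=4)

print(precision_at_k(initial, relevant, k=2))   # 0.5
print(precision_at_k(reranked, relevant, k=2))  # 1.0
```

In this toy case the second-stage scores happen to match the labels perfectly. The article's result is that real models are far from this ideal on expert queries.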

Conclusion

While VLMs show promise in assisting nature researchers with image retrieval, they still have limitations. The INQUIRE dataset has highlighted the need for more domain-specific training data and better performance on complex queries. As researchers continue to develop and refine VLMs, these models may become invaluable tools for ecologists and conservationists, helping them quickly and accurately uncover complex phenomena in biodiversity image data. Such phenomena are critical to fundamental science and to real-world impacts in ecology and conservation.