Unlocking the Secrets of Large Language Models

Large language models (LLMs) like Claude have changed the way we use technology. They power tools like chatbots, help write essays and even create poetry. But despite their amazing abilities, these models are still a mystery in many ways. People often call them a “black box” because we can see what they say but not how they figure it out. This lack of understanding creates problems, especially in important areas like medicine or law, where mistakes or hidden biases could cause real harm.

The Importance of Interpretability

Understanding how LLMs work is essential for building trust. If we can’t explain why a model gave a particular answer, it’s hard to trust its output, especially in sensitive areas. Interpretability also helps identify and fix biases or errors, which in turn helps keep the models safe and ethical. For instance, if a model consistently favors certain viewpoints, knowing why can help developers correct it. This need for clarity is what drives research into making these models more transparent.

Anthropic’s Breakthrough

Anthropic, the company behind Claude, has been working to open this black box. They’ve made exciting progress in figuring out how LLMs think, and this article explores their breakthroughs in making Claude’s processes easier to understand.

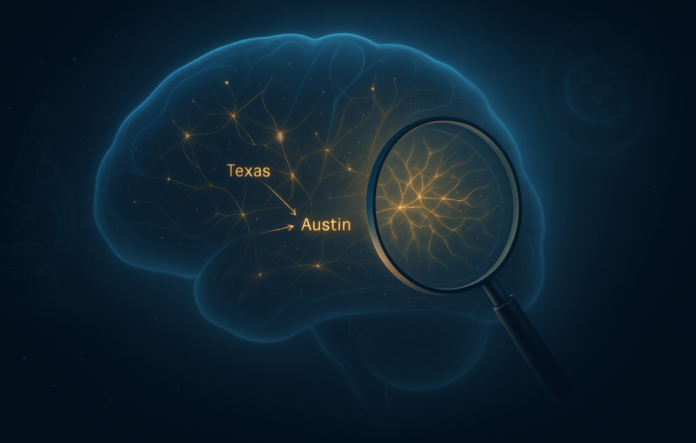

Mapping Claude’s Thoughts

In mid-2024, Anthropic’s team made an exciting breakthrough. They created a basic “map” of how Claude processes information. Using a technique called dictionary learning, they found millions of patterns in Claude’s “brain”—its neural network. Each pattern, or “feature,” connects to a specific idea. For example, some features help Claude spot cities, famous people, or coding mistakes. Others tie to trickier topics, like gender bias or secrecy.
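
To make the idea of a "feature" concrete, here is a minimal sketch of what a learned dictionary is used for: breaking one internal activation into a few named features. It is an illustration only, not Anthropic's method. The four feature names, the four-dimensional activation, and the helper functions are all hypothetical, and the step that actually learns the dictionary from data is skipped.

```python
# Hypothetical toy dictionary: each feature is a direction in a tiny
# 4-dimensional activation space. A real dictionary is learned from data
# and holds millions of features that are not aligned with single axes.
FEATURES = {
    "city": [1.0, 0.0, 0.0, 0.0],
    "famous_person": [0.0, 1.0, 0.0, 0.0],
    "code_bug": [0.0, 0.0, 1.0, 0.0],
    "secrecy": [0.0, 0.0, 0.0, 1.0],
}


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def active_features(activation, threshold=0.1):
    """Return the features whose strength in this activation exceeds the threshold."""
    strengths = {name: dot(activation, direction) for name, direction in FEATURES.items()}
    return {name: s for name, s in strengths.items() if s > threshold}


def reconstruct(strengths, dim=4):
    """Rebuild an approximate activation from the active features only."""
    out = [0.0] * dim
    for name, strength in strengths.items():
        for i, value in enumerate(FEATURES[name]):
            out[i] += strength * value
    return out


# A made-up activation: mostly "city", some "secrecy", a trace of "code_bug".
activation = [0.9, 0.0, 0.05, 0.4]
found = active_features(activation)
approx = reconstruct(found)

print(found)
print(approx)
```

Only a couple of features survive the threshold, and together they rebuild most of the original activation. That sparsity is what makes the features readable: each activation is explained by a handful of ideas rather than by thousands of raw numbers.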

Tracing Claude’s Reasoning

Next, Anthropic wanted to see how Claude uses those thoughts to make decisions. They recently built a tool called attribution graphs, which works like a step-by-step guide to Claude’s thinking process. Each point on the graph is an idea that lights up in Claude’s mind, and the arrows show how one idea flows into the next. This graph lets researchers track how Claude turns a question into an answer.
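
The graph idea can be sketched in a few lines of code. The example below is a toy under stated assumptions, not Anthropic's tool: the node names, the edge weights, and the `strongest_path` helper are all invented for illustration, and the graph is assumed to have no cycles. It stores ideas as nodes and influences as weighted arrows, then finds the chain of ideas that contributes most to the answer.

```python
# Hypothetical attribution graph: EDGES[src][dst] is how strongly the idea
# `src` pushes the idea `dst` to activate. All values are made up.
EDGES = {
    "prompt": {"Dallas": 0.9, "capital": 0.8},
    "Dallas": {"Texas": 0.7},
    "capital": {"say a capital": 0.6},
    "Texas": {"Austin": 0.5},
    "say a capital": {"Austin": 0.4},
}


def strongest_path(edges, start, goal):
    """Return (score, path) for the path whose edge weights multiply to the largest value.

    Assumes the graph is acyclic. Returns (0.0, []) if the goal is unreachable.
    """
    if start == goal:
        return 1.0, [start]
    best_score, best_path = 0.0, []
    for nxt, weight in edges.get(start, {}).items():
        score, path = strongest_path(edges, nxt, goal)
        if path and weight * score > best_score:
            best_score, best_path = weight * score, [start] + path
    return best_score, best_path


score, path = strongest_path(EDGES, "prompt", "Austin")
print(" -> ".join(path), f"(strength {score:.3f})")
```

Here the strongest route runs from the prompt through "Dallas" and "Texas" to "Austin", which is the kind of intermediate step a researcher would look for when checking whether a model reasoned its way to an answer.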

The Challenges

Even with all this progress, we’re still far from fully understanding LLMs like Claude. By Anthropic’s own account, attribution graphs currently give a satisfying explanation for only about one in four of the prompts researchers have tried. While the map of its features is impressive, it covers just a portion of what’s going on inside Claude’s brain. With billions of parameters, Claude and other LLMs perform countless calculations for every task. Tracing each one to see how an answer forms is like trying to follow every neuron firing in a human brain during a single thought.

The Bottom Line

Anthropic’s work on making LLMs like Claude more understandable is a significant step forward in AI transparency. By revealing how Claude processes information and makes decisions, the company is moving toward addressing key concerns about AI accountability. This progress could open the door to safer integration of LLMs into critical sectors like healthcare and law, where trust and ethics are vital.

As interpretability methods mature, industries that have been cautious about adopting AI may have reason to reconsider. More transparent models like Claude point toward a future for AI in which machines not only replicate human intelligence but also explain their reasoning.