Introduction to Protein Localization

A protein located in the wrong part of a cell can contribute to several diseases, such as Alzheimer’s, cystic fibrosis, and cancer. But with around 70,000 different proteins and protein variants in a single human cell, identifying their locations manually is extremely costly and time-consuming, because scientists can typically test for only a handful of proteins in one experiment.

The Challenge of Protein Localization

The Human Protein Atlas, one of the largest such datasets, catalogs the subcellular behavior of over 13,000 proteins in more than 40 cell lines. Yet it has explored only about 0.25 percent of all possible pairings of proteins and cell lines within the database. That leaves most of the space uncharted, which is why new computational approaches are needed to streamline the process.
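To give a rough sense of scale, the figures above can be multiplied out. This is a back-of-the-envelope sketch only: it treats "over 13,000" and "more than 40" as exact lower bounds, so the real counts are somewhat larger, and the variable names are illustrative.

```python
# Lower-bound figures quoted in the text; the true numbers are somewhat higher.
n_proteins = 13_000
n_cell_lines = 40
explored_fraction = 0.0025  # "about 0.25 percent"

total_pairings = n_proteins * n_cell_lines
explored_pairings = round(total_pairings * explored_fraction)
unexplored_pairings = total_pairings - explored_pairings

print(f"possible pairings:    {total_pairings:,}")
print(f"explored (approx.):   {explored_pairings:,}")
print(f"unexplored (approx.): {unexplored_pairings:,}")
```

Under these assumptions, roughly 1,300 of at least 520,000 protein and cell line pairings have been measured.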

A New Computational Approach

Researchers from MIT, Harvard University, and the Broad Institute of MIT and Harvard have developed a new computational approach that can efficiently explore the remaining uncharted space. Their method, called PUPS, can predict the location of any protein in any human cell line, even when neither the protein nor the cell line has been tested before. It also goes one step further than many AI-based methods by localizing a protein at the single-cell level, rather than as an averaged estimate across all the cells of a specific type.

How PUPS Works

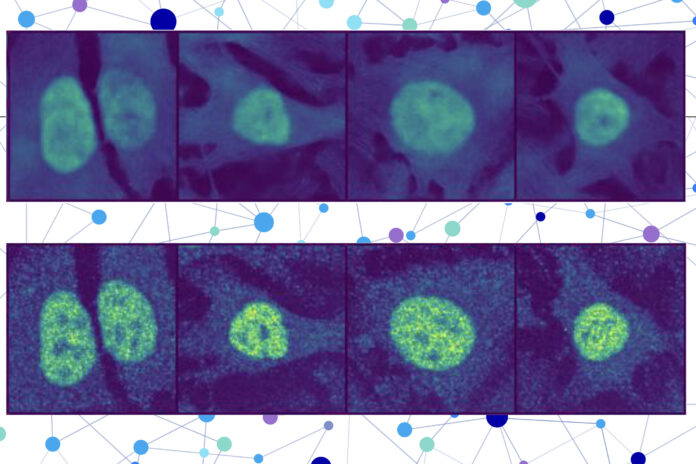

PUPS combines a protein language model with a special type of computer vision model to capture rich details about a protein and a cell. The user inputs the sequence of amino acids that forms the protein and three cell stain images: one for the nucleus, one for the microtubules, and one for the endoplasmic reticulum. PUPS then uses an image decoder to output a highlighted image that shows the predicted location.
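The inputs described above can be pictured as a small data structure. The sketch below is not the PUPS interface: the class name `LocalizationQuery`, its fields, and the validation rules are all hypothetical, chosen only to show what a well-formed query (one sequence plus three equally sized stain images) might look like.

```python
from dataclasses import dataclass

# The 20 standard amino acids in one-letter code.
STANDARD_AMINO_ACIDS = set("ACDEFGHIKLMNPQRSTVWY")

Image = list[list[float]]  # grayscale image as rows of pixel intensities


@dataclass
class LocalizationQuery:
    """Hypothetical container for the model inputs (not the PUPS API)."""
    sequence: str
    nucleus: Image
    microtubules: Image
    endoplasmic_reticulum: Image

    def validate(self) -> tuple[int, int]:
        """Check the inputs and return the shared (height, width) of the stains.

        Width is taken from the first row; ragged rows are not detected.
        """
        if not self.sequence:
            raise ValueError("sequence is empty")
        unknown = set(self.sequence.upper()) - STANDARD_AMINO_ACIDS
        if unknown:
            raise ValueError(f"non-standard residues: {sorted(unknown)}")
        stains = (self.nucleus, self.microtubules, self.endoplasmic_reticulum)
        shapes = {(len(img), len(img[0]) if img else 0) for img in stains}
        if len(shapes) != 1:
            raise ValueError(f"stain images differ in shape: {sorted(shapes)}")
        return shapes.pop()


# Toy usage with blank 3 x 4 images and an arbitrary short sequence.
blank = [[0.0] * 4 for _ in range(3)]
query = LocalizationQuery("MKTAYIAKQR", blank, blank, blank)
height, width = query.validate()
print(height, width)
```

Checking that the three stains share a shape matters because they depict the same cell and would be read together as channels of one image.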

Collaborating Models

The first part of PUPS uses a protein sequence model to capture the localization-determining properties of a protein and its 3D structure from the chain of amino acids that forms it. The second part incorporates an image inpainting model, a kind of computer vision model designed to fill in missing parts of an image. It looks at the three stained images of a cell to gather information about the state of that cell, such as its type, individual features, and whether it is under stress.
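The article does not say how the two models exchange information. One common pattern for conditioning an image model on a vector, shown here purely as an assumption, is to append the same sequence embedding to the features of every pixel. The function names and the two-number embedding are hypothetical stand-ins.

```python
def stack_stains(nucleus, microtubules, er):
    """Turn three H x W grayscale stains into one H x W x 3 feature map."""
    return [
        [list(channels) for channels in zip(n_row, m_row, e_row)]
        for n_row, m_row, e_row in zip(nucleus, microtubules, er)
    ]


def condition_on_sequence(feature_map, sequence_embedding):
    """Append the same sequence embedding to every pixel's feature vector."""
    return [
        [pixel + list(sequence_embedding) for pixel in row]
        for row in feature_map
    ]


# Toy 2 x 2 stains; values are arbitrary intensities, not real data.
nucleus = [[0.9, 0.1], [0.8, 0.0]]
microtubules = [[0.2, 0.7], [0.3, 0.6]]
er = [[0.1, 0.4], [0.2, 0.5]]
embedding = [0.5, -1.0]  # stand-in for a learned protein embedding

fused = condition_on_sequence(stack_stains(nucleus, microtubules, er), embedding)
print(fused[0][0])  # [0.9, 0.2, 0.1, 0.5, -1.0]
```

Each pixel then carries both cell-specific information (the three stain intensities) and protein-specific information (the embedding), which is what a downstream decoder would need to place a given protein in a given cell.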

A Deeper Understanding

The researchers employed a few tricks during the training process to teach PUPS how to combine information from each model so that it can make an educated guess about a protein’s location, even if it hasn’t seen that protein before. For instance, they assigned the model a secondary task during training: to explicitly name the compartment of localization, like the cell nucleus. This extra step was found to help the model learn more effectively.
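A secondary task of this kind is usually folded into training as an extra term in the loss. The sketch below assumes a mean-squared-error image loss, a softmax cross-entropy loss for the compartment label, and a weight of 0.1 between them. None of these choices comes from the article, and the function names are illustrative.

```python
import math


def mean_squared_error(predicted, target):
    """Pixel-wise reconstruction error between two flattened images."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)


def cross_entropy(logits, true_index):
    """Negative log-probability of the true compartment under a softmax."""
    peak = max(logits)  # subtract the max for numerical stability
    log_norm = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_norm - logits[true_index]


def multitask_loss(pred_image, true_image, logits, true_compartment,
                   aux_weight=0.1):
    """Main image loss plus a weighted auxiliary classification loss."""
    image_term = mean_squared_error(pred_image, true_image)
    label_term = cross_entropy(logits, true_compartment)
    return image_term + aux_weight * label_term


# Toy values only; the compartment list is an illustrative label set.
compartments = ["nucleus", "cytosol", "mitochondria"]
loss = multitask_loss(
    pred_image=[0.9, 0.1, 0.0, 0.0],
    true_image=[1.0, 0.0, 0.0, 0.0],
    logits=[2.0, 0.5, 0.1],
    true_compartment=compartments.index("nucleus"),
)
print(f"combined loss: {loss:.4f}")
```

Because both terms are minimized together, the model is pushed toward internal features that explain the compartment as well as the pixels, which is one plausible reading of why the extra task helps.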

Verifying PUPS

The researchers verified that PUPS could predict the subcellular location of new proteins in unseen cell lines by conducting lab experiments and comparing the results with the model’s predictions. In addition, compared to a baseline AI method, PUPS showed lower prediction error on average across the proteins they tested.
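The article does not name the error metric used in this comparison. As one illustration of what an average prediction error across proteins can mean, the sketch below assumes per-pixel mean absolute error between a predicted image and a measured one, averaged over proteins. The protein names and pixel values are made up for the example and are not results from the study.

```python
def mean_absolute_error(predicted, measured):
    """Average per-pixel absolute difference between two equally sized images."""
    diffs = [
        abs(p - m)
        for p_row, m_row in zip(predicted, measured)
        for p, m in zip(p_row, m_row)
    ]
    return sum(diffs) / len(diffs)


def average_error(predictions, measurements):
    """Mean of the per-protein errors; both arguments map protein name -> image."""
    errors = [
        mean_absolute_error(predictions[name], measurements[name])
        for name in measurements
    ]
    return sum(errors) / len(errors)


# Made-up 1 x 2 images for two hypothetical proteins.
measured = {"protein_a": [[1.0, 0.0]], "protein_b": [[0.0, 1.0]]}
model_output = {"protein_a": [[0.5, 0.0]], "protein_b": [[0.0, 1.0]]}

print(average_error(model_output, measured))  # 0.125
```

Running the same function on a baseline model's outputs for the same proteins would give a directly comparable number.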

Future Directions

In the future, the researchers want to enhance PUPS so the model can understand protein-protein interactions and make localization predictions for multiple proteins within a cell. In the longer term, they want to enable PUPS to make predictions in living human tissue, rather than cultured cells.

Conclusion

PUPS marks a significant advance in protein localization. By providing a fast and accurate way to predict where proteins reside, it could help researchers and clinicians diagnose diseases more efficiently, identify drug targets, and better understand complex biological processes. Because it generalizes across proteins and cell lines, it can be applied to combinations that have never been measured in the lab.