Introduction to Robot Learning

When you ask a chatbot a question, you might not realize how much information it relies on to give a response. Engineers hope to build comparable foundation models for robots, trained on enough data to teach new skills like picking up and moving objects. However, collecting instructional data and transferring it across robotic systems remains challenging.

The Problem with Robot Training

Teaching a robot by teleoperating its hardware step by step, using technology like virtual reality (VR), is time-consuming. Training on videos from the internet is less instructive, because the clips don’t provide a step-by-step walkthrough for a particular robot. This is where a new simulation-driven approach called "PhysicsGen" comes in.

What is PhysicsGen?

PhysicsGen is a system developed by MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Robotics and AI Institute. It customizes robot training data to help robots find the most efficient movements for a task. The system can multiply a few dozen VR demonstrations into nearly 3,000 simulations per machine. These high-quality instructions are then mapped to the precise configurations of mechanical companions like robotic arms and hands.

How PhysicsGen Works

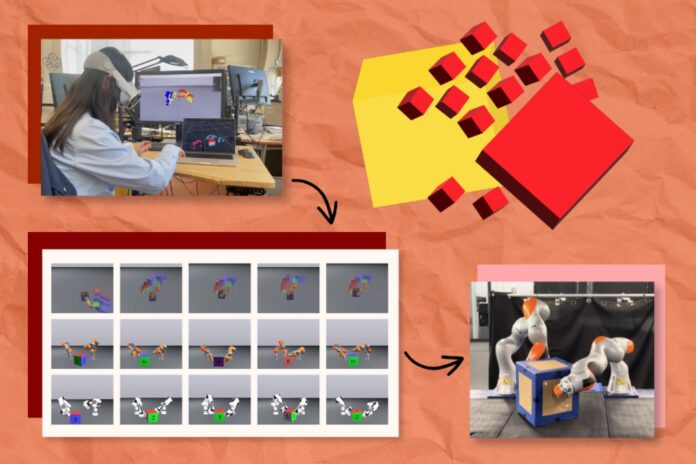

PhysicsGen creates data that generalize to specific robots and conditions via a three-step process. First, a VR headset tracks how a person manipulates objects like blocks with their hands. These interactions are mapped into a 3D physics simulator, which represents key points of the hand as small spheres that mirror the person’s gestures. Second, the pipeline remaps these points to a 3D model of a specific machine, moving them to the precise "joints" where that system twists and turns. Finally, PhysicsGen uses trajectory optimization to simulate the most efficient motions for completing the task.
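To make the last step more concrete, here is a minimal sketch of trajectory optimization on a toy problem. It is not PhysicsGen's implementation: the single-joint setup, the `path_cost` measure, the `optimize_trajectory` function, and the demonstration values are all assumptions chosen for illustration. The sketch starts from a jittery, demonstration-like sequence of joint angles and uses gradient descent to find a lower-effort motion between the same start and end poses.

```python
def path_cost(q):
    """Sum of squared step sizes: a simple stand-in for motion effort."""
    return sum((b - a) ** 2 for a, b in zip(q, q[1:]))


def optimize_trajectory(demo, learning_rate=0.2, iterations=2000):
    """Gradient descent on path_cost with the first and last waypoints held fixed."""
    q = list(demo)
    interior = range(1, len(q) - 1)
    for _ in range(iterations):
        # Gradient of path_cost with respect to each interior waypoint,
        # computed for all waypoints before any of them is updated.
        grads = [2 * (2 * q[t] - q[t - 1] - q[t + 1]) for t in interior]
        for t, g in zip(interior, grads):
            q[t] -= learning_rate * g
    return q


# Hypothetical joint-angle demonstration (radians) with some wasted motion.
demo = [0.0, 0.3, 0.1, 0.5, 0.4, 0.9, 0.7, 1.0, 0.8, 1.1, 1.0]
optimized = optimize_trajectory(demo)

print("demo cost:     ", round(path_cost(demo), 3))
print("optimized cost:", round(path_cost(optimized), 3))
```

With this particular cost, the optimizer converges to evenly spaced waypoints between the fixed start and end angles. A realistic pipeline would also have to account for contact, object dynamics, and joint limits, none of which this toy version models.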

Benefits of PhysicsGen

Each simulation is a detailed training data point that walks a robot through potential ways to handle objects. When this data is used to train a policy, the machine has a variety of ways to approach a task and can try a different motion if one doesn’t work. This could eventually help engineers build a massive dataset to guide machines like robotic arms and dexterous hands.

Expanding Robot Capabilities

PhysicsGen’s potential also extends to converting data designed for older robots or different environments into useful instructions for new machines. The system may also guide two robots to work together in a household on tasks like putting away cups. By generating so many instructional trajectories, PhysicsGen could help build a foundation model for robots, making task instructions useful to a wider range of machines.

Results

PhysicsGen turned just 24 human demonstrations into thousands of simulated ones, helping both digital and real-world robots reorient objects. The researchers tested their pipeline in a virtual experiment where a floating robotic hand needed to rotate a block into a target position. The digital robot completed the task with 81 percent accuracy, a 60 percent improvement over a baseline that learned only from human demonstrations.
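The article does not say whether the 60 percent improvement is measured in percentage points or relative to the baseline, and the two readings imply very different baselines. The short calculation below works out both. Only the 81 percent and 60 percent figures come from the text; the variable names and both interpretations are assumptions for illustration.

```python
reported_accuracy = 0.81  # stated in the article
improvement = 0.60        # stated in the article; absolute vs. relative is not specified

# Reading 1: accuracy is 60 percentage points above the baseline.
baseline_if_absolute = reported_accuracy - improvement

# Reading 2: accuracy is 60 percent higher than the baseline (a relative gain).
baseline_if_relative = reported_accuracy / (1 + improvement)

print(f"baseline under the percentage-point reading: {baseline_if_absolute:.1%}")
print(f"baseline under the relative reading:         {baseline_if_relative:.1%}")
```

Under the first reading the baseline would have succeeded roughly one time in five; under the second, roughly half the time.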

Future Plans

The researchers plan to extend PhysicsGen to a new frontier: diversifying the tasks a machine can execute. They’d like to use PhysicsGen to teach a robot to pour water when it’s only been trained to put away dishes, for example. The pipeline doesn’t just generate dynamically feasible motions for familiar tasks; it also has the potential to create a diverse library of physical interactions that can serve as building blocks for accomplishing entirely new tasks a human hasn’t demonstrated.

Conclusion

PhysicsGen is a step forward in teaching robots to manipulate objects. By expanding a small set of human demonstrations into thousands of robot-specific simulations, the system can help robots find the most efficient movements for a task. With its potential to convert data designed for older robots into useful instructions for new machines, PhysicsGen could play an important role in building a foundation model for robots. The researchers’ next goal, diversifying the tasks a machine can execute, will test how far the approach extends.